Understanding Operating Systems

Operating System Definition and Function

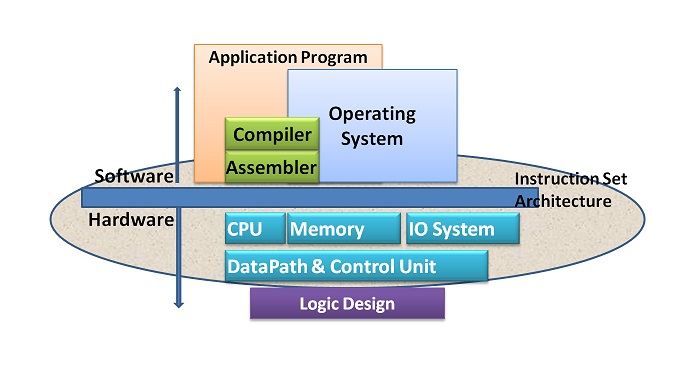

An operating system can be defined as an interface between the user and the hardware. In the realm of computing, hardware components operate using machine code—binary digits of 0s and 1s—which are incomprehensible to most users. The operating system bridges this gap by providing a user-friendly environment where tasks can be performed conveniently and efficiently.

The Role of Operating Systems in Computing

- Process Execution: The OS manages the execution of all processes, ensuring that each application runs smoothly without interfering with others.

- Resource Allocation: It efficiently allocates hardware resources like CPU time, memory space, and input/output devices among various programs.

- File Management: The OS handles the creation, deletion, reading, and writing of files, organizing data storage for easy access and modification.

- Security and Access Control: It enforces security protocols to protect data integrity and restrict unauthorized access.

- Error Detection and Handling: The OS monitors the system for errors, preventing system crashes and data loss by handling exceptions effectively.

By abstracting the complexities of hardware interactions, the operating system enables developers and users to focus on application functionality and user experience.

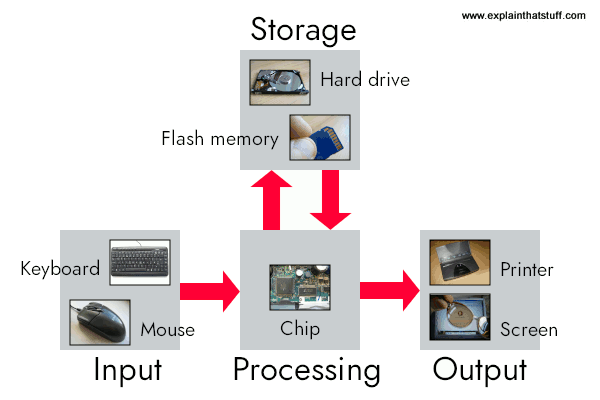

Structure of a Computer System

Understanding the structure of a computer system is pivotal for grasping how operating systems function within the broader context of computing environments.

Components of a Computer System

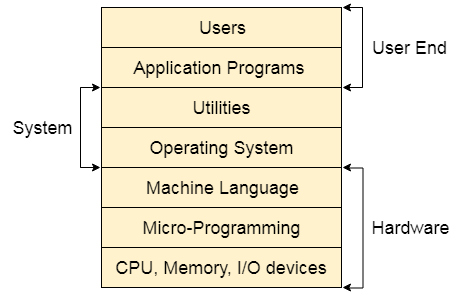

Users: Individuals who interact with the computer system to perform tasks ranging from simple data entry to complex computational operations.

Application Programs: Software designed to carry out specific tasks for users, such as word processors, web browsers, games, and database systems.

System Programs: Utilities and tools like compilers, editors, and command-line shells that facilitate application development and system management.

Operating System: The intermediary that manages hardware resources and provides services to application and system programs.

Hardware: The physical components of the computer system, including the Central Processing Unit (CPU), memory modules, storage devices, and peripheral equipment.

The Layered Architecture

The computer system’s architecture is often conceptualized in layers, where each layer relies on the functionality provided by the layer beneath it. This modular approach enhances system organization, maintainability, and scalability.

- User Interface Layer: Interacts directly with users through graphical or command-line interfaces.

- Application Layer: Hosts application programs that perform specific user-oriented tasks.

- Operating System Layer: Manages hardware resources and provides a platform for applications to run.

- Hardware Layer: Executes low-level operations and processes machine instructions.

Core Functions of an Operating System

Operating systems perform a multitude of functions essential for the optimal operation of computer systems. Below are the key areas where the OS plays a critical role.

1. Process Management

Definition: Process management involves creating, scheduling, and terminating processes. A process is an instance of a program in execution.

Responsibilities:

- Process Creation and Termination: The OS handles the initiation and closure of processes.

- Scheduling: Determines the execution order of processes using algorithms like First-Come, First-Served (FCFS), Shortest Job First (SJF), and Round Robin.

- Resource Allocation: Assigns necessary resources to processes, such as CPU time and memory space.

- Process Synchronization: Coordinates processes that share resources to prevent conflicts.

2. Process Synchronization

Definition: Process synchronization ensures that multiple processes can operate concurrently without interfering with each other when accessing shared resources.

Mechanisms:

- Semaphores: Variables used to control access to common resources by multiple processes.

- Mutexes: Mutual exclusion objects that prevent simultaneous access to a resource.

- Monitors: High-level synchronization constructs that allow safe access to shared data.

Importance:

- Data Consistency: Prevents race conditions where the outcome depends on the sequence or timing of uncontrollable events.

- Deadlock Avoidance: Implements strategies to prevent situations where processes are waiting indefinitely for resources.

3. Memory Management

Definition: Memory management involves the handling of memory allocation and deallocation for processes during their execution.

Functions:

- Tracking Memory Usage: Keeps records of which parts of memory are in use and by which processes.

- Memory Allocation: Assigns memory spaces to processes as needed.

- Virtual Memory: Uses techniques like paging and segmentation to extend apparent memory beyond physical limits.

Benefits:

- Efficient Resource Utilization: Maximizes the use of available memory.

- System Stability: Prevents memory leaks and fragmentation.

4. CPU Scheduling

Definition: CPU scheduling is the method by which the OS decides which process in the ready queue should be allocated CPU time.

Scheduling Algorithms:

- First-Come, First-Served (FCFS): Processes are scheduled in the order they arrive.

- Shortest Job First (SJF): Processes with the shortest execution time are scheduled next.

- Priority Scheduling: Processes are scheduled based on priority levels.

- Round Robin: Each process is assigned a fixed time slot in a cyclic order.

Objectives:

- Maximize CPU Utilization: Keep the CPU as busy as possible.

- Minimize Waiting Time: Reduce the time processes spend in the ready queue.

- Fairness: Ensure equitable distribution of CPU time among processes.

5. File Management

Definition: File management involves the systematic handling of files and directories in the storage system.

Capabilities:

- File Creation and Deletion: Allows users and applications to create and remove files.

- Directory Management: Organizes files into directories for efficient navigation and access.

- File Access Control: Implements permissions to restrict or grant access to files.

System Organization: A hierarchical file system enhances data organization, making it easier for users to locate and manage files.

6. Security

Definition: Security in operating systems involves protecting the system’s resources and data from unauthorized access and malicious threats.

Security Measures:

- Authentication: Verifies the identity of users accessing the system.

- Authorization: Determines the access levels of authenticated users.

- Encryption: Protects data confidentiality during storage and transmission.

- Firewall and Antivirus Programs: Guards against external threats like malware and hacking attempts.

Importance:

- Data Protection: Safeguards sensitive information from breaches.

- System Integrity: Ensures that system configurations and resources are not tampered with.

The operating system is a critical component that orchestrates the complex interactions between hardware and software, enabling users to perform tasks effectively. As a Project Manager in the DevOps sphere, a deep understanding of operating systems enhances the ability to lead projects that deliver high-quality software solutions efficiently.

By mastering concepts such as process management, synchronization, memory management, CPU scheduling, file management, and security, professionals can optimize system performance and align development practices with organizational goals. Emphasizing quality at each point ensures that the end products are reliable, secure, and meet the evolving needs of users.

References

- Silberschatz, A., Galvin, P. B., & Gagne, G. (2018). Operating System Concepts (10th ed.). Wiley.

- Stallings, W. (2018). Operating Systems: Internals and Design Principles (9th ed.). Pearson.

- Bass, L., Weber, I., & Zhu, L. (2015). DevOps: A Software Architect’s Perspective. Addison-Wesley Professional.